Sunday, June 21, 2009

Changing My Blog Location

I've decided to consolidate my blog postings on my organization's website, www.datacenterpulse.org.

Data Center Pulse is an organization I founded with my brother-in-law. The goal of this community is to track the pulse of the industry and influence the future of the data center through discussion and debate.

I look forward to continuing the discussion and debate from this new location.

Regards,

Mark

Monday, May 25, 2009

What does the Cloud Look Like?

With all the talk about clouds, you'd think we would have a simple definition by now. Since we don't, I'm going to take a quick stab at what I think the different cloud types are and why the distinctions between them are important.

Cloud Types:

Flat, Vertical, or Rainbow (I'm sure I'll come up with others later)

Flat: Amazon or Google clouds

These are clouds that represent a flat cross section of applications that are largely defined as Web 2.0. They are built around tools like Java and other web technologies.

A simple, effective way of delivering and managing Web 2.0 applications in an efficient, scalable environment. It's relatively easy to move or build new applications to take advantage of this cloud and make them available over the internet from any browser.

Problem:

Most companies have vertical applications. These are applications like Oracle, SAP, Siebel, Exchange and others that are not built on Web 2.0 platforms. These applications can't just be shipped out of your company's data center and installed at Google or Amazon. Nor can you send them your data to have it loaded onto their platform. The work flows, database integration, third party application integration and other proprietary development make these applications unsuited for life on a Web 2.0 platform.

Vertical Cloud:

These are clouds built to deliver a specific application vertical or work flow. Salesforce.com might be considered an example of this cloud, although a more definitive model would be SAP or Oracle selling SaaS (Software as a Service).

Pick the best of breed solution that accommodates a specific set of applications needed by your organization. You will still gain many of the benefits of the cloud in the sense that you will have a highly scalable environment that "should" allow you to only buy what you need when you need it.

Problem:

Depending on the solution there may be issues with integration into other applications you have. Your upgrade or patch cycles might become significantly more complex as you'll be negotiating time and process with your cloud provider. However, with time I'm certain these issues would be worked through.

"Rainbow" Cloud:

The All Inclusive Cloud or Tolerant Cloud. Personally, I like the idea behind the rainbow cloud, as it allows companies to move into it one application or service at a time with the assumption that they can eventually load their entire set of applications into the environment. This cloud is best defined today by VMware's vSphere solution.

Provides a platform that can accommodate all of the applications in your current environment, from legacy to Web 2.0. You also gain the flexibility of scale (up and down), portability (move from location to location easily). In this type of cloud environment your benefits increase with every application you add. You also simplify your support requirements as you don't have to have multiple providers/solutions supporting your virtualized systems.

Problem:

This is still a new platform, as are all Cloud offerings, so your choices must be made with care. My suggestion is to take the low hanging fruit approach: move your low risk applications first and thoroughly test how the environment performs and is supported (especially important if you’re buying cloud services as opposed to building an internal cloud). Over the course of 12-18 months, use your natural update/replace cycles to help you move the rest of your environment.

Comments are welcome as always!

Friday, May 1, 2009

Cloud Computing will Drive the "Aware" Data Center

If the "Cloud" isn't a fixed location or shape, how then does it fit in today's overbuilt data centers? The simple answer is, it depends. In many cases the "Fort Knox" or "Maginot line" data center of today doesn't make sense in a cloud enabled world. So what should the strategy be? I suggest that flexible, highly sensored, relatively low cost, but geographically dispersed data centers are where we should all be headed.

Data Centers should be built low cost but very well monitored and measured. Your application availability protection will be designed into the "Cloud" platform. This means you can build two data centers for less than the price of one high availability facility. It also means that with two facilities you can enable geographic diversity to help protect against disasters.
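The "two cheaper facilities instead of one hardened facility" argument can be illustrated with a little arithmetic. The sketch below is only an illustration: the availability figures are hypothetical, and it assumes the two sites fail independently and that the cloud platform fails over instantly, neither of which is guaranteed in practice.

```python
HOURS_PER_YEAR = 8760


def combined_availability(site_availability: float, sites: int = 2) -> float:
    """Chance that at least one site is up, assuming independent failures."""
    if not 0.0 <= site_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if sites < 1:
        raise ValueError("need at least one site")
    return 1.0 - (1.0 - site_availability) ** sites


def expected_downtime_hours(availability: float) -> float:
    """Expected hours per year during which no site is available."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    low_cost_site = 0.999      # hypothetical availability of one low cost facility
    hardened_site = 0.9999     # hypothetical availability of one high availability facility

    pair = combined_availability(low_cost_site, sites=2)
    print(f"one hardened site: {expected_downtime_hours(hardened_site):.2f} h/year down")
    print(f"two low cost sites: {expected_downtime_hours(pair):.4f} h/year down")
```

Under these assumptions the pair of modest facilities comes out ahead on paper, which is the point of designing availability into the platform rather than the building.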

Now for the cool part. I believe our next step in the evolution of the Operating Environment or Cloud platform will be the integration of the "Platform" with the building management systems. The benefit of tying the two together is that your Data Center team will have visibility into the entire system, and the system can be trained to protect itself. That's right, when the data center facility is failing it can tell the "platform" to move critical applications to a sister facility. This strategy of tying the building and the IT platform together will create a number of opportunities. The opportunities associated with an "Aware" data center are higher application availability, lower staffing requirements, much lower cost of ownership, and a system that can support and protect itself much faster than a person who has to be woken up at home.
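To make the "Aware" idea a little more concrete, here is a minimal sketch of the decision loop described above. Everything in it is an assumption made for illustration: the class names, the sensor fields, the thresholds, and the `evacuate_critical_apps` helper are hypothetical and do not correspond to any real building management system or cloud platform API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FacilityReading:
    """Hypothetical snapshot from the building management system."""
    inlet_temp_c: float
    on_utility_power: bool
    ups_minutes_remaining: float


@dataclass
class Application:
    name: str
    critical: bool
    site: str


def facility_is_failing(reading: FacilityReading,
                        max_inlet_temp_c: float = 35.0,
                        min_ups_minutes: float = 15.0) -> bool:
    """Flag the facility as failing on overheating or low battery runtime.

    The thresholds are illustrative defaults, not recommendations.
    """
    overheating = reading.inlet_temp_c > max_inlet_temp_c
    power_at_risk = (not reading.on_utility_power
                     and reading.ups_minutes_remaining < min_ups_minutes)
    return overheating or power_at_risk


def evacuate_critical_apps(apps: List[Application],
                           failing_site: str,
                           sister_site: str) -> List[str]:
    """Reassign critical apps from the failing site; return the names moved."""
    moved = []
    for app in apps:
        if app.site == failing_site and app.critical:
            app.site = sister_site  # stand-in for a real platform migration call
            moved.append(app.name)
    return moved


if __name__ == "__main__":
    apps = [Application("billing", True, "site-a"),
            Application("wiki", False, "site-a")]
    reading = FacilityReading(inlet_temp_c=41.0, on_utility_power=True,
                              ups_minutes_remaining=30.0)
    if facility_is_failing(reading):
        print("moving:", evacuate_critical_apps(apps, "site-a", "site-b"))
```

The design choice worth noticing is that the facility signals and the application placement live in one loop, so nobody has to be woken up to connect the two.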

I'll be talking more about this "system" approach to a cloud data center in the near future.

Sunday, April 26, 2009

Cloud Computing Too Expensive?

Cloud computing has many definitions, as we are all starting to better understand. However, to me there is one guiding premise and that's "Efficiency". Efficiency is followed closely by scalability and availability. The most interesting thing about those three words being together is that they can all be true if the cloud is architected correctly.

Why would we pursue the cloud option if it didn't buy us something? The question is "does it buy us something that is more than we currently have or just an alternative?"

To me the cloud represents an opportunity to make a small business' IT more like a large business. With the cloud the idea of geographic diversity and high availability should be a given. Neither high availability nor geographic diversity is a given for most small organizations. A traditional IT shop has to make pragmatic decisions about how much it can afford to do with a very limited set of IT and corporate resources. Generally that means delivering basic IT services, which most often don't include redundancy, easy disaster recovery or, even better, easy disaster avoidance. IT in small companies also has difficulty providing services to employees in far flung locations, and even if it does, supporting them is a costly prospect.

So here's another question:

If you can build a replacement IT environment that does everything your old environment did for a similar or slightly higher cost all while enabling some of the additional benefits of high availability, redundancy, lower cost of ownership, and geographic diversity, wouldn't you want to do that?

To me high cost means I pay more for one thing than I do another but receive the same benefits. High value is when I can pay roughly the same cost for something new as I did for something old and reap many additional operational and strategic benefits. I've often heard people complain that VMware virtualization was expensive and I always ask, "As compared to what?" How can something that drives down your cost of ownership and whose ROI is often measured in months, not years, be considered expensive? The same should be true for the cloud!

If your cloud provider can't demonstrate how they can provide you a service with capabilities that exceed your current environment and do it for the same or less than you could, then they're probably doing something wrong!

More of the cost benefit of cloud next time.

Monday, April 20, 2009

Oracle Acquisition of Sun Microsystems Interesting!

My first thought after seeing the headline at O'dark 30 this morning was, good idea! Larry can now take over the only available competition for his DB (MySQL) and find more ways to expand on Java in his current and future solutions. This seems like a win to me. Then I read more and found out that Larry wants to expand into the hardware market and attempt to sell the whole kit and caboodle, hardware & software, into the data center. This, I believe, is a failed idea. In pursuing this agenda Larry is literally going back in time. The entire world is moving towards the cloud. In the next two years the hardware layer will become even more commoditized than it is already. Why would you want to invest in your own hardware, when you can sell your software on everyone else's solution?

Just my 2 cents. I'd love to get everyone's thoughts on this.

Sunday, April 19, 2009

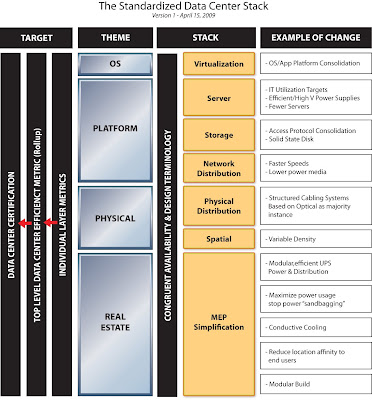

The Data Center is a System & The Data Center Stack

Whether you're concerned about where your power comes from (is it clean or how much loss does it suffer due to distance) or how a new blade chassis might affect your cooling, there's no way to deny that almost every aspect of the modern data center is linked.

The best way to get efficiency out of a system is to have a single owner. I'm proposing that all companies should assign a single "Data Center Owner". Ideally this person is someone who has a decent background in IT infrastructure, but also has an interest in or affinity for efficiency in general. Once this person is assigned you are much more likely to start collecting information about your "System" that encompasses the entire "Stack". Only then will you know what your system is costing you and what specific changes will mean to the performance of the system.

I would love to hear your thoughts on this issue!

Cloud Computing & Green IT is it "ALL HYPE?"

I've been in IT for over 20 years now and have seen technology become widely available that we wouldn't have dreamed about in 1989. I can remember thinking 1200 baud on the modem was amazing and that a 1.2 MB floppy disk was revolutionary. The first data center I worked in was about 1500 square feet and had a fairly large Unisys mainframe system that filled the entire room. This one computer did all the processing for our company of 1300 employees. Unfortunately all the work was scheduled and had to run in sequence, which meant customers would often have to wait a day or more for the results of their work. At the end of the week we would run a full backup of all the information and it would take the majority of a day to complete. Now I can get more compute power and twice the disk space in a hand held device that fits in my jeans pocket. That's right, that huge (in size) computer had only 16 GB of disk; you can easily get 2 to 4 times that much in a device that fits in the palm of your hand now.

In 1998 I can remember telling my workmates that one day we'd be able to treat the computers in a data center as one large computer, now 11 years later it's here in the name of "Cloud Computing". My naiveté of what it would take to make my little hope a reality notwithstanding, the hope was the right one. I have a personal perspective on what the cloud should be, but in the end it's about effective use of resources, better known as efficiency. Efficiency is also where "Green" comes in.

Now what sounds better: "Cloud Computing" & "Green IT" or "Efficient, Cost Effective IT"? I'm pretty sure I know which one you picked. I'm OK with the use of "Cloud Computing" and "Green IT" because these phrases help to galvanize our attention and interest, so in this case the ends justify the means!

Don't get me wrong, there's much more to cloud computing than just efficiency, and certainly there's more to it than I imagined when I first considered it in 1998. While there are many definitions of the "cloud", I'm going to focus on my version.

My high level definition of the cloud:

A platform of software that allows the user to utilize multiple computers and their assorted resources of disk, memory and CPU as one larger compute resource and to be able to access this resource from any location.

Why is cloud important in the above definition?

Today most applications are still run in hardware verticals: a defined, tiered set of servers, disk & network devices that support the different unique functions of an application (database, app engine, web, etc.). This configuration leads to very "inefficient" use of hardware resources, especially when you consider the extra hardware needed for test and development or recovery. In general the average non-virtualized server in today's data centers is running at <6% utilization! If that's not enough to concern you, how about the other issues associated with this type of vertical architecture:

- Lack of portability: Application instances can't easily be moved

- Hardware dependence: Changes to the hardware platforms can force changes to the application

- Difficulty in creating a new instance: In some cases the infrastructure to make one application work can take days to configure from scratch, making recovery from failures or corruption time consuming and costly

- Resiliency tends to be built into the hardware & data center, not the application

- Maintenance of the application or server environment often means down time for the customers

- Each environment pillar/vertical is specific to an application

If the cloud works the way it should you can solve all of the problems listed above and dramatically improve your efficiency!

- You'll potentially save millions on your data center infrastructure, while enabling higher availability, improved performance and increased scalability.

- You'll be helping to save the planet, while reducing your company's CapEx & OpEx overhead
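To put the "<6% utilization" figure in perspective, here is a rough back-of-the-envelope sketch. It assumes identical servers and workloads that pool perfectly onto shared hosts, which real environments never quite achieve; the fleet size and the 60% target are hypothetical numbers chosen only for illustration.

```python
import math


def hosts_needed(server_count: int,
                 avg_utilization: float,
                 target_utilization: float) -> int:
    """Estimate hosts required to carry the same total work at a higher utilization.

    Assumes identical hardware and perfectly poolable workloads.
    """
    if server_count < 0:
        raise ValueError("server_count must not be negative")
    if not (0 < avg_utilization <= 1 and 0 < target_utilization <= 1):
        raise ValueError("utilization values must be in the range (0, 1]")
    total_work = server_count * avg_utilization
    # Round before taking the ceiling so float noise cannot add a phantom host.
    return math.ceil(round(total_work / target_utilization, 9))


if __name__ == "__main__":
    fleet = 100      # hypothetical number of non-virtualized servers
    current = 0.06   # upper bound on average utilization quoted above
    target = 0.60    # hypothetical utilization goal after virtualization
    print(f"{fleet} servers at {current:.0%} need about "
          f"{hosts_needed(fleet, current, target)} hosts at {target:.0%}")
```

Even with generous headroom, the gap between the two numbers is where the infrastructure, power and cooling savings would come from.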

If you're not currently looking into how you can leverage the cloud or be greener, then you should at least start by thinking about what you'd like your compute infrastructure to look like 2 - 3 years down the road. You'll probably find that the best place to start is with virtualization.

Tuesday, April 7, 2009

Why Data Centers are Slow to Improve

Without a dedicated resource in charge of ensuring the data center is all it can be, how can we expect the environment to improve? Without a dedicated resource there's no one going to Data Center conferences, taking classes on data center management or working with data center peers. In this environment the data center is relegated to being a "special" room. This special room creates a huge but relatively silent sucking sound as it sucks the financial life out of the company and that's not even the bad part! The bad part is that the rest of the world suffers as a result of our general mismanagement of this power and water intensive environment.

Sunday, March 8, 2009

Technology adoption in Data Centers is too SLOW!

Today power utilization in US data centers comprises roughly 2-3% of the total used by the entire nation. There are lots of arguments about why this is important or why it might even point to reduced power utilization in other areas (e.g., improved efficiency of booking travel without driving to the travel agent's office). However, these arguments really don't matter. The fact remains that our data centers are a huge target of opportunity. This isn't just because our DCs use so much power, but because the vast majority of our DCs have potential efficiency gains of anywhere from 25% to 80%. If we take the midpoint between 25% and 80%, which is 52.5%, you can begin to recognize the size of the target: it's HUGE!
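The size of that target can be worked out directly from the figures in the paragraph above. This is only a rough sketch: it treats the midpoint of the 25% to 80% range as a stand-in for the average gain, and it simply multiplies the ranges together, which ignores how data centers are actually distributed across that range.

```python
def midpoint(low: float, high: float) -> float:
    """Midpoint of a range, used here as a rough proxy for the average."""
    return (low + high) / 2


def savings_share_of_national_use(dc_share: float, efficiency_gain: float) -> float:
    """Fraction of national power use that the efficiency gain would free up."""
    return dc_share * efficiency_gain


if __name__ == "__main__":
    dc_share_low, dc_share_high = 0.02, 0.03   # data centers' share of US power use
    gain_low, gain_high = 0.25, 0.80           # potential efficiency gains per DC

    typical_gain = midpoint(gain_low, gain_high)
    smallest = savings_share_of_national_use(dc_share_low, gain_low)
    largest = savings_share_of_national_use(dc_share_high, gain_high)

    print(f"midpoint efficiency gain: {typical_gain:.1%}")
    print(f"possible savings: {smallest:.1%} to {largest:.1%} of national power use")
```

In other words, the combined ranges imply savings somewhere between about half a percent and a little over two percent of the nation's total power use.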

How does the rate of new technology adoption play into this? Well, many of the solutions available today (Virtualization, Airside/Waterside AC, 480 Volts to the rack, Powering off Servers, Hot/Cold air isolation, etc.) are proven and used in production environments. So, why aren’t we lapping up these technologies like a bunch of hungry junk yard dogs? Unfortunately, that's where it gets a little more complex. I believe there are a number of reasons, like myth, risk aversion, lack of knowledge, higher priority projects, limited or poor integration between IT & Facilities teams, and a large number of data centers without dedicated resources with specific data center roles and responsibilities. We also don’t have a common certification program for data centers, nor do we have a simple way for folks to measure their performance, other than the PUE or DCiE metric created by Green Grid.

Myth:

“I heard that doesn’t work” or “the risk to the environment is high”

Risk Aversion:

The data center is the beating (mechanical & digital) heart of the modern company, and we’re trained not to mess with it!

Lack of Knowledge:

Data Center staff (IT & Facilities) aren’t given the opportunity to learn more about what it takes to own a data center and own it efficiently.

Higher Priority Projects:

While in many cases there are projects that are “higher priority”, I believe the reality is that IT & Facilities often don’t truly understand the potential opportunity of improving efficiency in the DC.

Limited or Poor Integration between IT & Facilities:

This issue helps lead to the problems noted in items 3 & 4. Because the two teams don’t work together they miss the changes that are currently affecting data centers. Unfortunately many of these “technology” changes have a facilities component and are therefore considered “Facilities” by IT, and, vice versa, “IT” solutions which can improve facilities operations (like saving power) aren’t appreciated by IT.

Dedicated Data Center Roles & Responsibilities for IT & Facilities teams:

This is generally caused by one or both of the following: the small size of the data center doesn’t seem to warrant a dedicated resource, and that’s combined with a limited understanding of what improvements can mean to the bottom line for the company.

Certification & Metrics:

This is a major topic all by itself, so I’ll cover it in more detail in an upcoming blog. See my previous post for information on the effort I have underway to develop certification for data centers.
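For readers who haven't used the PUE and DCiE metrics mentioned earlier, they are simple ratios: PUE is total facility power divided by IT equipment power, and DCiE is the inverse expressed as a percentage. The sketch below shows the arithmetic; the function names and the sample meter readings are made up for illustration.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0 or total_facility_kw < it_equipment_kw:
        raise ValueError("expected total_facility_kw >= it_equipment_kw > 0")
    return total_facility_kw / it_equipment_kw


def dcie_percent(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Data Center infrastructure Efficiency: the inverse of PUE, as a percentage."""
    if it_equipment_kw <= 0 or total_facility_kw < it_equipment_kw:
        raise ValueError("expected total_facility_kw >= it_equipment_kw > 0")
    return it_equipment_kw / total_facility_kw * 100


if __name__ == "__main__":
    total_kw = 1000.0   # hypothetical reading at the utility meter
    it_kw = 500.0       # hypothetical reading at the IT load
    print(f"PUE:  {pue(total_kw, it_kw):.2f}")
    print(f"DCiE: {dcie_percent(total_kw, it_kw):.0f}%")
```

A lower PUE (or higher DCiE) means less of the power entering the building is spent on cooling, power conversion and other overhead rather than on the IT load itself.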

How do we solve these issues?

Our IT & Facility leaders need to work closely together to identify, vet and propose new solutions for the data center.

Our Leaders need to convey an expectation that risk can be OK. The Data Center manager or operator and/or the facilities manager or engineer needs to outline what the benefit potential is vs. the assumed risk of making the change. We can’t continue to say “it might cause a problem” and use that as an excuse to not implement.

We also need to take responsibility for getting through the FUD (Fear Uncertainty & Doubt) that many vendors put out there regarding other products. This is a common issue across IT & MEP (Mechanical Electrical & Plumbing) solutions.

What can the customer do as a group to help reduce the risk, accurately outline the improvement potential and demonstrate the efficiency gains in a meaningful way?

- Take advantage of groups like Data Center Pulse, Green Grid, 7/24, Data Center Dynamics and others to compare notes with other owner/operators.

- Develop knowledge of how and where new solutions are being used by other owners.

- Get involved in the solution. Don’t wait for the vendor to spell out the opportunity for you. If you do, you’re most certainly going to pay more for less.

- Develop roles and responsibilities that clearly define how your data center should be managed and maintained by IT and Facilities staff.

- Take a risk. Just be smart about it.

Data Center Technology & Thinking is changing more quickly than ever:

It’s extremely important now for owners to understand the recent and on-going technology changes and new solutions that are available for implementation in their data centers. Over the last 5 years we’ve experienced more change in what’s available for your data center than we did in the previous 15. If your data center is more than 2 years old there are most certainly major opportunities for you to take advantage of. As a group we have a common responsibility to our businesses and to the environment.

Act a lot, act a little, but please act.

Monday, March 2, 2009

Data Center Pulse & Data Center Certification

The last 3 months have been a combination of tiring and exciting. We created a board of directors for Data Center Pulse, and we developed and then held our first DCP summit, but it was all well worth it. The Summit was a big success. We had over 15% of our membership participate, including new chapters in the Netherlands and India. While the local (Santa Clara) group was working in person we also had people contributing on-line. At the end of the day when we'd compiled our day's work the results were uploaded for our remote chapters to look over. Both the Indian and the Netherlands chapters then added their own content and shipped it back. All this was done in the course of 24 hours (1 full day). On the second day of the summit we presented our findings to the entire group, and then after polishing the presentations a little we presented the findings again to the "Industry" group. On the 3rd day we presented the Top 10 at the Technology Convergence Conference. The response from folks during and after the presentation showed we had struck a nerve. People are realizing that DCP has the potential to fill a gap that has been open for way too long.

I have always enjoyed collaborating instead of working alone and I can tell you the team work and collaboration was outstanding during the summit. We had outstanding participation from some very smart people and the content of our presentations shows it.

One of the tracks from the DCP Summit was "Data Center Certification". This track has now become an on-line project and I'm actively looking for volunteers from Industry and DCP membership. I've already spoken with the DoE about partnering with them and they are interested. I'm also looking at contacting similar groups outside the U.S. If you're interested in participating please send a message to me or through Feedback@datacenterpulse.com.

Check out www.datacenterpulse.org if you’d like to learn more about DCP.

Mark